Key Performance Metrics: How to grade your mainframe?

REMINDER – 2017 A.A. Michelson Award Call for Nominations

June 23, 2017CMG Journal Summer Issue 2

June 26, 2017Performance management is one of the key focus of any platform. A system may have several resources (CPU, I/O, storage, network etc.) that collectively work together to process a mission critical workloads. To assess overall health of the system, mainframe system reports can be reviewed on certain key performance metrics on these resources. These metrics are measured and compared against the service level agreements (SLA), or performance Rule-of-Thumb standards. An SLA is contract between the user and system that describes the goals to meet for business critical workloads. If results are not as expected, then mission critical workloads running on the system will usually suffer. Some of possible remedies could be, performance tuning to get metrics results to base standards or buy more resources or offload eligible workloads to specialty engines or steal from less critical work by adjusting priorities.

Types of workloads

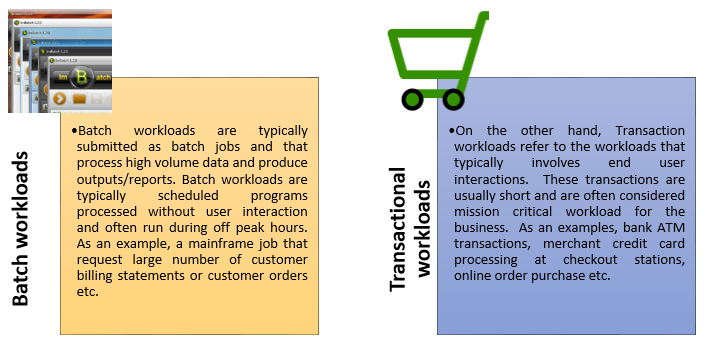

First let’s take a look at typical workloads that run on mainframe systems. Workloads that run on mainframe can be classified into 2 flavors – Batch processing or Online transactional processing.

Performance metrics

Below are some of key metrics to be used to gauge the system performance. Mainframe system regularly captures and provides these metrics data that various performance monitoring tools use to display to end users.

Average Throughput

The average throughput is the average number of service completions per unit time. Example, number of transactions per second or minute. Transaction workloads are typically measured using this performance metric.

Average Response Time

The average response time is the measure of average amount time it takes to complete single service. Transactional workloads are usually measured using this performance metric. This metric can also be specified as SLA goal for workloads

Resource Utilization

Resource utilization metric is the measure of time resource was busy. Example CPU utilization, Processor storage utilization, I/O rates, paging rates etc. Typically, the amount of time the workloads (batch or transaction) consumed resources over a period of time.

Resource Velocity

Velocity is measure of resource contention. When multiple workloads require a resource (example CPU) at the same time then there is contention for the resource. While one workload is using the resource other workloads are put in waiting queue. Resource velocity is the ratio of time taken for using the resource (A) to the total time spent using resource (A) and waiting in the queue (B). i.e. A / (A+B). This value is expressed as percentage of 0-100 range. A value of 0 mean, high amount of contention for resource and value of 100 mean no contention. This metric can be specified as SLA goal for workloads.

Performance Index

As part of SLA, workloads are classified as service classes and each service class has goal. Goals for workloads can be expressed as response time, velocity, etc. Since there are several types of goals defined for various workloads in SLA, to determine how workloads are performing with respect to their defined goals, a simple metric performance index(PI) is used. PI is simply a ratio of defined goal vs achieved goal. A PI value of 1 means workloads are meeting goals. A value < 1 mean, workloads are exceeding the goals and value > 1 mean, workloads are missing goals.

Author: Hemanth Rama is a senior software engineer at BMC Software. He has 11+ years of working experience in IT. He holds 1 patent and 2 pending patent applications. He works on BMC Mainview for z/OS, CMF Monitor, Sysprog Services product lines and has lead several projects. More recently he is working on Intelligent Capping for zEnterprise (iCap) product which optimizes MLC cost. He holds master degree in computer science from Northern Illinois University. He writes regularly on LinkedIn pulse, BMC communities and his personal blog.

Want more about Performance Measurement and Reporting?

From the 2016 Proceedings:

- Capacity Planning & Performance Reporting by Ellen Friedman and Roger Lee

- Windows System Performance Measurement and Analysis by Jeffry Schwartz

Not a member but want access? Join today at https://www.cmg.org/become-a-member/